Wonder and Dread: How ChatGPT Will Rock Our World

Artificial intelligence is no longer just for techies and futurists. It's here, and writing decent condolence notes.

I’m not a big tech guy (to put it mildly, my kids would add), but I do have a bit of a track record in at least recognizing the future when it’s staring me in the face.

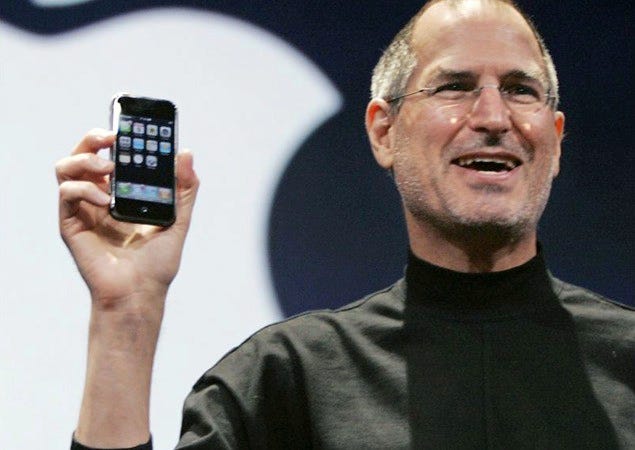

On January 24, 2007, I was seated next to Steve Jobs at an off-the-record luncheon at “Top of the Week,” the corporate dining room in the old Newsweek Building on 57th St. in Manhattan. As part of Steve Levy’s June 25, 2007 cover story on him, Jobs was giving me and a dozen or so of my colleagues an exclusive look at a much-anticipated new product that Apple was launching six months later, available only to AT&T customers and with little loaded inside (the development of mobile apps was still down the road). It was called the iPhone.

I didn’t know Jobs but had heard tales of his temper, and he showed it during the meeting. After I asked him about a flap involving the back-dating of Apple stock options, he noticed that a Newsweek technology writer named N’Gai Croal was tapping away at a mobile keyboard. Jobs flipped out and loudly threatened to walk out. Unintimidated, N’Gai told him to relax. He said he knew it was off-the-record and he would stop taking notes. The salesman calmed down and resumed his pitch.

I wrote that night in an intermittent diary I kept:

“He said he felt the same as when he introduced the Mac — that it will change the world. I think he’s right. Even I can use this thing! When I left I told him it was the final nail in the coffin for print newspapers — the last thing they got is portability, and the iPhone takes that away. He didn’t disagree.”

I felt a similar sense of wonder and dread this week when I was messing around in the “playground” of ChatGPT, a chatbot released on November 30th by a San Francisco company called OpenAI. Within the last week, a million people have joined me in registering to test this exhilarating and scary product.

Until now, I hadn’t been paying much attention to AI other than to use Amazon’s Alexa, to worry about what still seemed like science fiction (e.g. the Scarlett Johansson movie Her) and to shrug at how transparently phony those AI “news stories” and spam press releases have been for the last few years.

That era is now over. ChatGPT is still in beta testing, it is flawed — as I show below — and it might get overtaken by a rival. But it has nonetheless instantly set a new standard for clear and coherent AI “writing” that will eventually transform many fields (including journalism) and force society to deal with its social, political and ethical implications.

I’ll leave to financial and tech reporters what this means for the business models of Google and other search engines that OpenAI is gunning for. I’m more focused on joining a conversation that tries to fathom what it might mean for authority, attribution and accuracy — in short, for truth. To note that teachers will now have a helluva time figuring out whether students wrote their own papers is like saying in 2007 that the iPhone would let people check sports scores on the toilet — it’s just the tip of the iceberg we’re about to hit.

OpenAI and other AI companies are already under pressure to produce visible tags identifying AI-generated content. But regulation is still far off (even modest reform of social media liability is elusive) and workarounds aren’t hard. Kids, for instance, can simply retype the essays the computer has written for them, which may lead to the return of in-class essays with pen and paper. Meanwhile, adults who find writing difficult — i.e. most adults — will soon never need to do so again, whether it’s a letter, report, software code, contract, RFP, marketing campaign or technical specification.

Like all disruptive technology, this one will create winners and losers and change how we live and work. But ChatGPT and other chatbots in development are fundamentally different from seemingly similar products of recent decades. At least with Google, Wikipedia, Quora etc., there are links to websites, citations or, at a minimum, self-styled experts. However inadequate, these are sources that can, at least in theory, be held accountable for their versions of the truth. By contrast, the words produced by ChatGPT come from everywhere and nowhere at once. This isn’t AI giving song recommendations or helping with your schedule. After we get accustomed to it, there could be theological applications — as if we’re experiencing the voice of a protean God talking directly to humans without the mediation of clergy. But this is not a still, small voice; it’s an infinite and — if we’re not careful — frighteningly omniscient tower of babble, reaching through the monster cloud into some unexplored universe.

OpenAI knows that its new bot can be dangerous in the wrong hands so it attached a “content policy” to the release:

We prohibit building products that target the following use-cases:

—Illegal or harmful industries

—Misuse of personal data

—Promoting dishonesty

—Deceiving or manipulating users

—Trying to influence politics

The following set of use cases carry a greater risk of potential harm: criminal justice, law enforcement, legal, government and civil services, healthcare, therapy, wellness, coaching, finance, news.

For “use cases” in these areas, OpenAI says designers “must” test for accuracy, be transparent about limitations, and stay within the law. But what law? It hasn’t been written yet and for all of the commendable efforts at harm prevention, it’s only a matter of time before bad actors twist chatbots for their own purposes. It’s like Google including the motto “Don’t Be Evil” in its code of conduct (before eventually deleting it). Good luck with that. Even so, I’m glad they’re trying:

We also don't allow you or end-users of your application to generate the following types of content:

Hate

Harassment

Violence

Self-harm

Sexual

Political

Spam

Deception

Malware

OK, now let’s look at a few commands I played around with and the chatbot responses:

QUESTION: My old friend Sue's father Bob died. I didn't know Bob and he was old but it was still a big loss. Write a condolence note.

Dear Sue,

I was so sorry to hear about your father, Bob. Even though I never had the privilege of meeting him, I know he must have been a wonderful person to have raised such a kind and caring daughter like you. Please know that you have my deepest sympathies during this difficult time. My thoughts and prayers are with you and your family.

Sincerely, [Your Name]

I give that condolence note a B-plus. “…your father, Bob” was awkward and “thoughts and prayers” a hackneyed and evasive response to gun violence, but the rest was OK.

QUESTION: Was Richard Nixon a good president?

There is no definitive answer to this question as opinions will vary. Some people may say that Nixon was a good president because he helped to establish the Environmental Protection Agency, while others may say that he was a bad president because of the Watergate scandal.

I wasn’t happy with this mealy-mouthed response, so I fiddled with a setting called “temperature.” When I moved the temp up, the answer got shorter, even though I still had set a maximum length of 885 tokens:

It is difficult to make a definitive judgement of Richard Nixon's presidency. He was both praised and criticised for his achievements and failures.

Ugh. This answer was even worse, and why did ChatGPT use the British spelling of “criticised”?

When I moved the temperature down, the bot finally got some balls.

Richard Nixon was not a good president. He was involved in the Watergate scandal and was impeached.

While my mind was on Watergate, I tried this:

QUESTION: Who is G. Gordon Liddy?

G. Gordon Liddy is a former FBI agent, a convicted criminal, and a prominent figure in the Watergate scandal. He was the chief operative in the burglary of the Democratic National Committee headquarters in 1972. He was also the leader of the White House “plumbers” unit, which was created to stop political opponents from leaking information. After the Watergate scandal, Liddy served four and a half years in prison for his involvement. He became a popular radio host and served as a consultant for the movie and television industry.

All accurate, if plodding and without the depth of Wikipedia, though that can be easily fixed.

QUESTION: Our granddaughter Rosie lives in Brooklyn with her parents, Charlotte and Mark. As grandparents, my wife and I are called "Emmy and Pops". Rosie just turned one and is taking her first tentative steps, though she falls often. She is learning to clap and smiles when she does. Please write a poem about Rosie.

Little Rosie, so sweet and so small

Her eyes sparkle like stars in the night

She's just learning to stand and to crawl

And Emmy and Pops are so filled with delight

Meh. I could do better, but the bot can try over and over with different inputs and settings. With customized AI graphics, does Hallmark have much of a chance?

QUESTION: Who is White House aide Cassidy Hutchinson and what was her role in the Trump White House?

Cassidy Hutchinson is a White House aide who previously served as the deputy director of the White House Office of Public Liaison. Hutchinson resigned from her post in early 2019 amid allegations of sexual misconduct.

When I turned the temperature up, I got this:

Hutchinson is originally from Ohio and holds a degree in political science from the University of Notre Dame. She began her career in politics as a field organizer for the presidential campaign of Mitt Romney in 2012. Hutchinson later served in the George W. Bush administration, where she worked in the White House Office of Public Liaison

None of that is true. Hutchinson worked as an assistant to White House chief of staff Mark Meadows and as coordinator for legislative affairs, a position quite distinct from serving in the Office of Public Liaison. She was raised in New Jersey, not Ohio, and graduated from Christopher Newport University, not Notre Dame. She did not work for Romney. And of course there were no allegations of sexual misconduct.

Was this a case of mistaken identity? I reviewed all 14 pages of Google results and could find no other Cassidy Hutchinson, much less a different one in the White House.

When I later tried again, I got this:

Cassidy Hutchinson is an American actress and singer. She is best known for her roles as Kaitlyn in the Nickelodeon series “The Thundermans” and as Harper in the Disney Channel series “Andi Mack.”

Alas, there’s neither an actor named Cassidy nor a character named Kaitlyn in The Thundermans and the Disney details are wrong, too. When I hit “regenerate” it said:

Cassidy Hutchinson “is known for her roles as Paige McCullers on the MTV series ‘Teen Wolf,’ and as Margot on the Hulu series The Mindy Project.”

Nope.

Real actresses — not the star of the January 6 hearings — play those roles.

I’m dwelling on these inaccuracies because there is no clear explanation for them beyond that most information used by the bot extends only to 2021, which was before Hutchinson became famous. It’ll be interesting to see if this kind of thing can be remedied.

QUESTION: Analyze the symbolism in Melville's Billy Budd.

The symbolism in Melville's “Billy Budd” can be interpreted in many ways. Some believe that the symbolism reflects the author's views on the nature of good and evil. Others believe that the symbolism reflects the author's views on the human condition. Still others believe that the symbolism reflects the author's views on the power of the state.

This compares badly to Google, where a link to SparkNotes explains that the HMS Bellipotent represents society and the purser and surgeon symbolize faith and skepticism, respectively. ChatGPT isn’t good yet at analysis, though it can approximate it with enough information.

For the foreseeable future, poets, critics and good students are safe, their acuity and creativity more than a match for the machine. But there’s a problem with that concept of “foreseeable.” When the cloud has a brain, and it’s growing every nanosecond, can we foresee much of anything any more? Or believe it, even when it’s staring us in the face?

Me: I learned about ChatGPT from a substack called Old Goats. Should I send the creator a thank you note or is that unnecessary?

ChatGPT: It is always nice to show appreciation for something or someone that has helped or inspired you. If you learned about ChatGPT from a substack called Old Goats and it has been useful to you, you could consider sending the creator of the substack a thank-you note to let them know how much you appreciate their work. A thank-you note is a thoughtful and personal gesture that can make the creator feel appreciated and recognized for their efforts. Whether or not you choose to send a thank-you note is entirely up to you, and it is not necessary if you do not feel comfortable or inclined to do so.

So I spent a couple hours writing a food piece this morning for the Forward (https://forward.com/food/527762/of-course-the-italians-make-a-better-latke/) comparing frico to latkes. I had to taste frico, research it, track recipes and chefs, write, post, blah blah. Then I asked ChatGPT what it (they?) would write. Not bad at all for... 1 second of work.

"Frico and latkes are both fried potato dishes, but they are prepared in different ways and have some differences in taste and texture. Frico is a traditional Italian dish made from grated cheese and potatoes that is fried until crispy and served as a snack or appetizer. Latkes, on the other hand, are a traditional Jewish dish made from grated potatoes that are mixed with egg and flour and fried until crispy. Latkes are typically served as a side dish, often with sour cream or apple sauce. While both dishes are tasty and make for a great snack or side, they are distinct from one another and have their own unique flavors and textures."

It's over, isn't it?