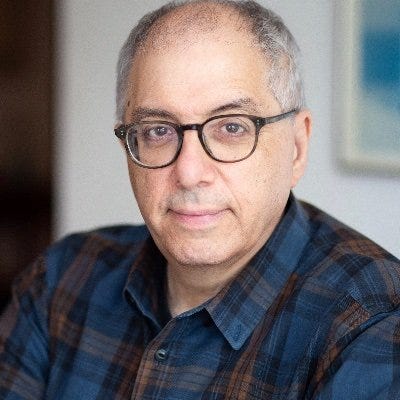

I’m doing something a little different this week. Instead of interviewing Steven Levy, the dean of American technology writers, by myself, I’ve asked Meredith Stark, my partner and producer on Old Goats, to take part. Meredith, who launched and ran CBSNews.com, Gartner.com and then CNBC.com, is more knowledgeable than I am about digital media and brings a lot to the rumination. Steven, with whom I overlapped at Newsweek for a dozen years, is one of my close friends. Starting with Hackers in 1984, he is the author of eight first-rate books on technology (including two on Apple, one on Google and one on Facebook) and currently serves as Editor-at-Large at Wired, where he writes a column. I’ve also included below a column by Edward Kosner, with whom I ruminated last year, on the hot topic of gate-keepers. Ed was editor of Newsweek, New York Magazine, Esquire and the New York Daily News and knows a thing or two about what we’ve lost.

JON:

In your column you wrote you're gonna follow Elon Musk with a bag of popcorn and a crash helmet. What did you mean by that?

STEVEN LEVY:

That was a couple of weeks ago and I've been replenishing the popcorn and the crash helmet has a few dents in it. It's wildly entertaining. He’s talking about the virtues of free speech. On the other hand, he’s illustrating some of the drawbacks of the free exchange of ideas by posting objectionable content himself and it’s a little alarming that a mega billionaire is going to have so much power over a speech platform.

JON:

He tweeted that the Democrats are the extremist party—very alarming.

And he's kind of a hypocrite on free speech. He's into NDAs, and he's into retribution against whistleblowers.

STEVEN LEVY:

We've got this mega billionaire admitting that he’s buying a business but not for the business. Elon talks about being bullied as a kid and now he's bullying people. He's not bothered by these contradictions. It's a jaw-dropping situation created by a jaw-dropping person.

JON:

So Meredith, does that conform to your take too?

MEREDITH STARK:

Whether I am happy about it or not, I must admit that I find the theater fun to watch.

Steven, what do you think is the difference in impact between Elon Musk buying Twitter and Jeff Bezos buying The Washington Post?

STEVEN LEVY:

Well, I think Bezos is on pretty much familiar ground. The Washington Post is an established publication. Twitter is a controversial new medium. In terms of editorial, Marty Baron [the longtime Post editor] never said, “Jeff Bezos reached in and made [me] write this or that.”

MEREDITH STARK:

There were similar concerns at the time with Bezos buying the paper.

STEVEN LEVY:

There was a lot of hand-wringing, and rightfully so. All our big media is run by billionaires. Look at Rupert Murdoch. But none of that is new. We have a tradition of whoever owns the barrels of ink gets to have a big influence on society. Social media is also dominated by billionaires, but it has a different set of problems. The question is, do you want well-intentioned owners trying to grapple with those issues, or do you want someone (or are you stuck with someone) who says, “I don't care about these problems, I value free speech”? Twitter once declared itself the Free Speech wing of the Free Speech party, and then that turned out to be not a good thing to do for business or for society. And they had to understand how moderation works and how to keep some of the worst speech off their platform, even though it might be legal.

We're not sure how Elon is going to deal with this. I suspect that since he’s a smart person, who knows how to deal with a situation when the first ten launches of a spaceship don't land upright on the launch pad, that he might adjust [his plan]. So I'm not in full panic mode. It's a unique and somewhat alarming situation that to me is tempered by the fact that he's ultimately an engineer, and will certainly apply some of those values to the problem.

JON:

His first position, and he said this repeatedly in the last few days, is that his moderation will only come into play if somebody's breaking the law, that he believes that the law is the line.

STEVEN LEVY:

I don’t believe that. I don’t believe that he will not take down hardcore porn.

JON:

He has also said that he’s going to take down bots or die trying.

STEVEN LEVY:

Twitter has made some progress on that. Some bots are okay, right? If you're a business and you're scheduling things to promote your product, that’s a kind of bot. You don’t want to totally take down bots. Elon also said he wants to authenticate all humans. Not quite sure what that means.

MEREDITH STARK:

Full authentication comes with its own set of pitfalls. What happens to all that [personally identifiable] data and what do they do with it?

STEVEN LEVY:

It’s a pretty good idea to give people the option to authenticate themselves in a low-pressure way—to say I am who I am, and then give users the option not to see things from people who aren’t authenticated, or to not let those people reply to your tweets. So if I'm a female journalist, and I want to keep my feed open to reader comments and potential sources, I can actually limit access to a degree by saying, anyone can reply to my tweets, unless you don't fess up to who you are. Maybe then you're not going to threaten to rape me, because if you do, you have to do it under your own name. And that might not be a good look for your employer.

People are talking about tying content moderation schemes to the blockchain in the ether. Elon is a big fan of that. And Twitter's a big fan of that. They were exploring different uses for this already. There are a lot of ideas thrown around about how you’re going to be able to choose your own way to moderate content. But that implies that Twitter will be able to successfully label the kinds of tweets that you don't want to see (or that you want to promote more), and that approach requires a lot of energy and creativity and is ultimately going to be imperfect. That's not a simple problem.

MEREDITH STARK:

Is the technology today ready for that scale? I know [Musk] has a reputation for solving big problems, but could he really do this now?

STEVEN LEVY:

The great thing about innovators like Elon is that when people say, “The technology isn't ready to perform a certain feat,” they say, “I'm going to assume that it is possible,” and then they build something that turns the trick. There are a lot of headwinds against forcing people to make their own choices about what level of speech they want to see in their feed. People don’t like to sign on to a service and immediately have to answer questions like, “What choices do I have to make? What algorithm will I use?” It’s an utter truism in technology that the vast majority of users of any service will not change the default setting.

MEREDITH STARK:

We went through all those cycles of personalization in “my news” and “my Yahoo.” Nobody ever did it.

STEVEN LEVY:

So the idea that you can solve that by making users choose their tweets is window dressing. It’s a plausible fix in the mind of certain people who are fanatic about these services. But most people aren't going to use it.

JON:

So let me ask you guys both about this concept of algorithm transparency. What does that mean? And might it become contagious and put pressure on Facebook and other platforms to do the same? To show a little more of what's behind the curtain.

MEREDITH STARK:

On a consumer level, I want to turn that into English. I want to know what the rules are. I want to know what I can and can’t post. Users today can be banned or put on pause, and don’t really understand their “crime.” The messaging from the social companies on this issue is awful and inconsistent. There needs to be clarity of rules, clearly laid out and available. I know people in media are asking to understand the algorithm, but I think users just want to understand the guidelines and boundaries.

STEVEN LEVY:

I'm not sure what Elon means when he says total transparency. There is policy transparency, where Twitter can crisply tell you why this tweet can't stand and some other tweet can. And then there's algorithmic transparency. That’s what determines what shows up in your feed. Feeds used to be purely chronological, which was 100 percent transparent: If I follow a group of people, and if someone in the group posted something a minute ago, it would be one of the first things I see, just like you're dipping your gourd in a river. And if a tweet was posted well before you opened the app, it's lost to you. Then they realized maybe people want to see things that the users they follow tweeted earlier. Maybe you want to see this other tweet from someone you know, that a lot of other people liked.

So when people complain about the algorithm, what they're really saying is, “What are those rules that determine what you see? And what is the risk of sharing those rules publicly?” The downside of that transparency is that if people know the algorithm, they can game it.

JON:

What do you mean by that?

STEVEN LEVY:

Let's say there's a word Twitter has flagged, like Ivermectin, because you might be boosting it. Twitter might say, “Gee, Ivermectin is a signal for vaccine misinformation, and our algorithm is not going to show those tweets because they’re probably promoting a falsity about vaccines.” So then people adapt, and put a little asterisk to replace one of the vowels of the word, or misspell the words intentionally. So that's the way people figure out how to game the algorithm.

If all the rules of the algorithms are published and made open, then bad actors will have a very sophisticated understanding of how they can post content which is otherwise going to be downgraded. Or they'll figure out what kinds of things get boosted by friends or a bot or figure out what certain kinds of people are retweeting.

MEREDITH STARK:

The purpose of the algorithm for them is to drive engagement. The more you consume, the better their business. It’s created to make you come back for more, spend another minute, look at one more tweet, one more ad. So sometimes that comes with not a great alignment with what's good for society. It's good for Twitter and its business.

If Elon leans into tweaking the algorithm, plus opening the site to very little moderation, what do you think the impact will be on the advertising model? Advertisers are the bulk of their revenue. How are they gonna feel about an open Town Square with no moderation, having their advertisement directly adjacent to content craziness?

STEVEN LEVY:

If users open their Twitter feed and a tweet is showing hardcore porn, that’s not an environment that advertisers want to be involved in. That's why I'm thinking that even though that's legal, I don't think we're gonna see that.

Maybe Elon will go as far as to say that users have to specifically opt in before Twitter shows them everything, even the ugly stuff. Google does that in their search engine. I think that he's going to experiment, with his North Star being more speech and free speech.

JON:

A lot of people think that Musk should have spent some of that $44 billion on media literacy or other things that could help train people to be more skeptical about what they're seeing and reading. At least he gets that editing your tweets is a really good idea. It's crazy that it's binary–either leave it up or take it down. If you take down your tweet, then everybody gets really suspicious.

STEVEN LEVY:

The argument against the edit button is that you'll kind of gaslight people, you'll put something up and then edit it.

MEREDITH STARK:

Then say, “I didn't say that. I said the opposite.”

STEVEN LEVY:

Right. Maybe the blockchain can provide an audit trail for edits. Not my original idea, by the way.

JON:

So Ben Shapiro tweeted:

Notwithstanding Musk’s “extremist Democrats” tweet, doesn’t it sound like the right wing is setting themselves up for a massive disappointment?

STEVEN LEVY:

Some people are saying Elon’s changes will make Twitter one of those right wing social networks, like Gettr or Truth Social. But one of the reasons that Twitter is so attractive to the right wing is that they can agitate all the left wing people on the service. If they're talking to themselves, they can't really annoy anyone.

The toughest audience in every social media company now is their employees, who are unhappy with misbehavior, and generally support policies which reduce harmful speech. Many of them are being courted constantly by other companies and startups, and they like to work for a company they feel good about. Some people are excited. Elon is a hero. And they're saying, “Wow, this is so cool.” Other people are saying, “Hold on one second. I don't feel comfortable being associated with someone who's going to basically come in to give harmful speech a bigger platform.”

JON:

Let’s talk about the bigger issue of gatekeeping. So, you wrote recently that you've had a change of heart about gatekeepers? What did you mean?

STEVEN LEVY:

When the internet came along, a lot of people celebrated because they said we're going to break the grip of the gatekeepers. It used to be that there were three television channels and these powerful publications like Newsweek. There's a lot of downside to those gatekeepers. During the war in Iraq, for instance, Newsweek was wrong. The internet was seen as a way to free ourselves, and I think that was a good thing, and I still think it's a good thing to remove gatekeepers. But the problem with removing gatekeepers is that you still need leaders and institutions to establish what facts are and what truth is, and just not be crazy. Today we have leaders who've given that function up, specifically in the Republican Party. And when you lose that, and also have no gatekeepers, then you're in trouble.

MEREDITH STARK:

There’s also been a rise of an alternate set of gatekeepers, with Dan Bongino, Tucker Carlson, Joe Rogan and more. But gatekeepers they are. They are just different from the ones we had with the three networks and the big magazines.

JON:

I’m obsessed with this topic and so is Barack Obama, who gave an interview to The Atlantic and a speech at Stanford, where he was very clear that he considers disinformation to be a really serious problem for society. And I noticed that when his book came out, he mentioned Walter Cronkite in every interview, that there was this descent from Walter Cronkite to Tucker Carlson, to what Obama very decorously called “raw sewage in the public square.”

What the RAND Corporation, in a report, called “Truth Decay” is not like tooth decay, which is a mild medical problem. For democracy, it’s as serious as a heart attack, this retreat from truth and facts and the authority structure of news being obliterated and replaced by a Tower of Babel where lies are completely unimpeded much of the time.

So I think we all agree on the problem. The question is, what do we do about it? What if anything can the government do about it and what kind of pressure should be put on billionaires to regulate themselves? Is there any kind of requirement perhaps through amending Section 230 of the Communications Decency Act of 1996? Or is that ultimately a fool's errand?

STEVEN LEVY:

Well, again, I think it's not just the gatekeepers. It's the guardrails that we've lost. I am really surprised that we've gotten here when Trump said to Lesley Stahl, outright, that he wants to make it so people don't know what truth is, that his truth becomes the truth.

I thought, well, good luck with that. Truth is truth, facts are facts. But I was wrong. He actually succeeded. To me, that was the most amazing thing about Trump, that he succeeded in that. He said he could kill someone in the middle of Fifth Avenue and it wouldn’t matter, and he was right. Every day now we learn Trump killed this guy on Fifth Avenue, and we don’t do anything about it. One thing we can do is put people in jail for overthrowing the government.

JON:

I think there's a kind of a fundamental misconception about what the role of the people who own these platforms and run these platforms is. Once they say that they have Terms of Service, they are establishing that there are certain standards, then the argument just becomes, what are those standards?

STEVEN LEVY:

You want them to be treated like magazine editors?

JON:

Yes, they have to have some responsibility for what is in their publication. In other words, you couldn't walk into Newsweek or The New York Times, and say this is my opinion, put it in your paper.

STEVEN LEVY:

I disagree with that. When you are running a platform of user-generated content, you cannot be responsible for the content of every person who posts something. That is different and should be looked at in a different way. Individuals should be responsible for what they say and that's what Section 230 is about.

MEREDITH STARK:

We still have gatekeepers on traditional digital media, on websites, small or large newsletters, apps and podcasts. They are still held to a higher standard. I don't know if it's enforced all the time, but legally, they're accountable. That's what Section 230 does for social media: In one fell swoop, in just 26 words, it says to any publisher, you're not held to a publisher’s responsibility for the content on your site if it's produced by somebody else.

"No provider or user of an interactive computer service shall be treated as the publisher or speaker of any information provided by another information content provider." (Section 230 of the Communications Decency Act of 1996)

STEVEN LEVY:

Section 230 also offers an escape valve that allows those platforms to say, “Wait a minute, there's certain kinds of content that would make this website an unfriendly environment.” It allows them to do that kind of moderation without losing their protection against lawsuits.

MEREDITH STARK:

So is it your view that the cat is now out of the bag? There’s no turning back? Almost half of the people in this country used to smoke, and we as a society decided to pull it back, and changed that behavior with regulation and awareness. Times changed and we changed because we understood the impact of smoking. Couldn’t we look at Section 230 in somewhat the same way? It might have made sense in 1996, but now that we know the harm it has done and can do to our society, can’t we revisit the topic two decades later to see if it still makes sense after all we know now?

STEVEN LEVY:

Look, if someone comes up with a way to amend 230 and it doesn't destroy its essence, good luck, but I haven't heard of it. Pull out one strand, and it then unravels the whole sweater.

JON:

Martha Minow at Harvard has looked closely at this and she offers some ideas about government regulation, which is only beginning in Europe. Now, people hate regulation, especially conservatives, but once you say, OK, if it's not a magazine, maybe it's more like a public utility, then you start to think how are public utilities regulated?

I just want to mention the nuclear power industry. The only reason that it was able to flourish until the Three Mile Island [nuclear accident] in 1979 is that a senator from New Mexico named Clinton Anderson in the 1950s got legislation through that immunized the industry from lawsuits. In partial exchange for that, the Nuclear Regulatory Commission regulated the hell out of nuclear power because they knew that it was potentially dangerous to public health. I think you could argue that these lies on social media are radioactive. So the question then is, how do we think harder about what kind of regulation there should be? Not government censorship, but maybe something like what the FCC does with broadcast media. Or what local governments do with demonstrations. Musk calls Twitter the public square. Well, you need a permit to give speeches in the public square.

MEREDITH STARK:

Back in 1996, we were just trying to figure it all out. There had been previous cases with Prodigy and CompuServe that hadn’t provided legal guidance to the industry. For me as a publisher, I just wanted to figure out how to put comments on my site to drive a little engagement, and to understand what I would be legally accountable for. Section 230 was created at the time to knock down roadblocks by providing clarity in this area, helping to turbo-charge the growth of the industry.

Looking at these issues today is normal and a natural progression of an industry. The automobile was invented in the late 1880s, but the first traffic light didn’t arrive until 1914; the national highway system not until the 1950s. We need to keep the rules updated for the times.

STEVEN LEVY:

I remember being at Newsweek during that time doing a story about the 1996 Communications Act, talking to Al Gore, and Section 230 wasn’t even a blip in that story. Now, if you want the social media companies to be responsible for all the content on their platform, then they have to go overboard in policing what is said, because otherwise they will get sued constantly.

JON:

Cable TV is not immunized and they seem to have a pretty vibrant debate. They say really harsh things. They're not gagged by the fact that people can sue them. So if Fox isn't immunized, why should Twitter or Facebook be immunized?

MEREDITH STARK:

This is the difference with Section 230 and how it comes into play. We have an example recently where Fox is being sued by Dominion Voting Systems for $1.6 billion for amplifying conspiracy theories about its technology. What you won’t find in that lawsuit is Dominion suing Facebook or Twitter, who actually spread the story much farther and wider than Fox. Fox can be held accountable legally, but not the social networks for distributing the same content.

STEVEN LEVY:

They do try to edit as much as possible. For COVID they've made huge efforts to tamp down.

MEREDITH STARK:

The selective editing and lack of transparency on where and when it is applied is confusing to people.

JON:

It’s always going to be selective.

STEVEN LEVY:

That's because as much as you belittle the public pressure, Jon, the public pressure forces those places in the open market not to do certain things, and to limit speech on their platform.

JON:

I don't want my position on this to be misrepresented. We should give a wide berth to free speech, but also push hard for them to enforce their terms of service, even if the right wing screams.

STEVEN LEVY:

But suing them is different. You don’t sue the mail for what it brings.

MEREDITH STARK:

But the mail doesn't make the choice whether to deliver you a package or not. The post office or the phone companies are truly carriers like ISPs delivering whatever content comes through their pipes. The socials have been living in the space half-way, and in my view, seem to want the best of both worlds. Sometimes they act as an ISP, but then also as publishers when they review content and decide what to promote or discourage. They hire content creators directly. They just have billions of comments and allow easy sharing.

STEVEN LEVY:

Section 230 says, Okay, we're gonna give you some room to make your platforms a nicer space and to keep certain stuff out, like terrorism, which they do a great job at, by the way. It’s one of the problems they’ve been able to solve because you can identify terrorist speech algorithmically. And your site is not inundated with porn because AI is smart enough to know what a naked body looks like. The only time they run into trouble is when AI targets nakedness like people breastfeeding, or cancer patients showing their treatment for breast cancer. Hate speech, on the other hand, is really hard.

MEREDITH STARK:

Steven, one other question: Trump and the right have wanted to get Section 230 repealed. When I listen to their arguments, I feel like they don't understand what 230 is.

STEVEN LEVY:

Neither does Biden.

MEREDITH STARK:

The right doesn’t understand that with a total repeal of 230, the practices they perceive as shadow-banning and the like would actually get worse [in their view] than they are today.

STEVEN LEVY:

They don’t get the actual implications of outright ending 230. If repealed, you could post something one person has seen and you're just as liable as if 20 million people saw it.

JON:

The regulation I'd like to see, and that might be totally unworkable, would somehow stop them from incentivizing heat and lies instead of light. I don't think it's a binary–crazy pie in the sky regulation versus no regulation. Something can be done. It's just very difficult.

STEVEN LEVY:

I promise to solve the problem.

JON:

All right. Sounds good!

Thanks Steven, thanks Meredith.

Bring Back the Gatekeepers!

by Ed Kosner

Resting on the windowsill in my home office is a small blue needlepoint pillow reading “675 IS ENOUGH!,” a gift from a pal. That’s the number of issues of New York Magazine I’d edited in the 1980s and early ’90s. Before that, I edited nearly 1,300 issues of Newsweek. And after New York, I put out 40 more of the monthly Esquire and more than 1,500 editions of the New York Daily News and the Sunday paper. I read every word in every issue of the magazines, including captions, before publication and all the significant stories and columns in the newspaper.

I was a gatekeeper—once a term of respect in journalism, now reviled by some on the left and right as an arbitrary censor of unpopular ideas and controversial information, an enemy of free speech. Yet, never have we needed gatekeepers more as the print press, social media, cable news, podcasts and the rest drown in a chaotic torrent of misinformation, outright lies, suspicion and rancor.

It’s important to remember that the “new media” are really new in the long, imperfect history of journalism. Yahoo was founded in 1994; Facebook a decade later; Arianna Huffington co-founded The Huffington Post, one of the first online publications to publish the topical work of contributors, in 2005. Twitter started tweeting in 2006. CNN pioneered all-news programming as far back as 1980, but for years it was essentially a TV version of the just-the-facts-ma’am Associated Press. Starting in 1996, Fox News and MSNBC gave cable news the partisan edge so prevalent today. Gaining an audience in the mid-2000s, podcasts are now a part of the global mediaverse with Joe Rogan and more than 1.2 million others, and that’s just in English.

There was plenty of misinformation published in what is now considered a golden age of print, the second half of the twentieth century. But the great newspapers, magazines, and the TV and radio networks were renowned for their dictatorial editors and news directors who killed bad ideas and sketchy stories and were merciless to staffers who tried to get them into the publications or on the air.

Not everything printed while my name was at the top of mastheads was National Magazine Award or Pulitzer worthy, and some dopey or meretricious stuff slipped through. But I was vigilant if imperfect at bouncing or spiking material that failed the sniff test.

Now, that media world dominated by the big papers, the news magazines Newsweek, Time and U.S. News, and the half-hour network evening newscasts presided over by anchors like Douglas Edwards, Walter Cronkite, Bob Schieffer, Huntley & Brinkley, John Chancellor, Howard K. Smith, Peter Jennings and Diane Sawyer (yes, they were nearly all men in those days) is long gone. It has been replaced by a news circus: an unmediated daily onslaught of fact, fiction, partisan slant, inane clickbait, and hate speech directed at anyone with a television set, radio, or internet connection to a smart phone, tablet or laptop.

The heart of the problem is the early decision by regulators that the operators of social media like Facebook, Twitter, TikTok, and Instagram and many of the conduits for podcasts should not be deemed publishers responsible for the material they distribute, but rather utilities like the phone company—essentially pipes carrying whatever their users choose to pump through them. In the same way, cable news hosts are generally treated by their networks as free agents, allowed to spew partisan misinformation or parrot the lies of their favored pols.

In fact, these hosts should be treated as columnists in reputable publications: They are entitled to their opinions, but not to flog flagrant distortions or outright lies. To hold them to such traditional standards and to discipline violations is not a free speech issue, but a journalistic one.

Under fire for some of the hate speech, Covid misinformation and other atrocities their users have posted, social media sites began monitoring what they transmit, flagging some pernicious material and booting some users (like the 45th President of the U.S.) for serial violations. That’s a start, but a baby step along the long road that needs to be followed. There are many complex issues involved in making the billionaires who own social media sites liable for what they publish, but there is no question that they are publishers, not the phone company or your local power and light utility.

For most of American history, left- and right-wing radicals, racists and anti-Semites, kooks and grifters had limited access to millions of Americans. In the 1930s and ’40s, Gerald L. K. Smith and Father Coughlin spewed bile over the radio and Charles Lindbergh broadcast his anti-Semitic “America First” opposition to America going to war with Hitler. But other extremists had no choice but to preach to their own choirs. H. L. Mencken famously said “Freedom of the press is limited to those who own one,” and A. J. Liebling echoed the line.

But today anyone with an iPhone can reach uncounted thousands of fellow citizens, lie about important issues and people, spew life-threatening medical or scientific misinformation, post doctored photographs. TV pundits can mislead vast audiences. Many people consider it all “the press” or “the media,” and confidence in a bedrock of American democracy has plummeted. The media malignity is tormenting us as a nation with no commonly accepted facts on the ground. Instead, we have a babel of unedited voices that favors demagogues and fantasts.

Bring back the gatekeepers!

To me, there's something morally wrong about having $45 billion and using it to buy a social media platform. Maybe Elon Musk is actually what the world deserves.

Yes, navigating First Amendment free speech issues is a rocky road with many potholes, but social media platform regulation seems to be an urgent need. The Nuclear Regulatory Commission/Federal Communications Commission model seems to be a good fit for a public-facing info industry that creates potentially radioactive content and broadcasts it to the masses.